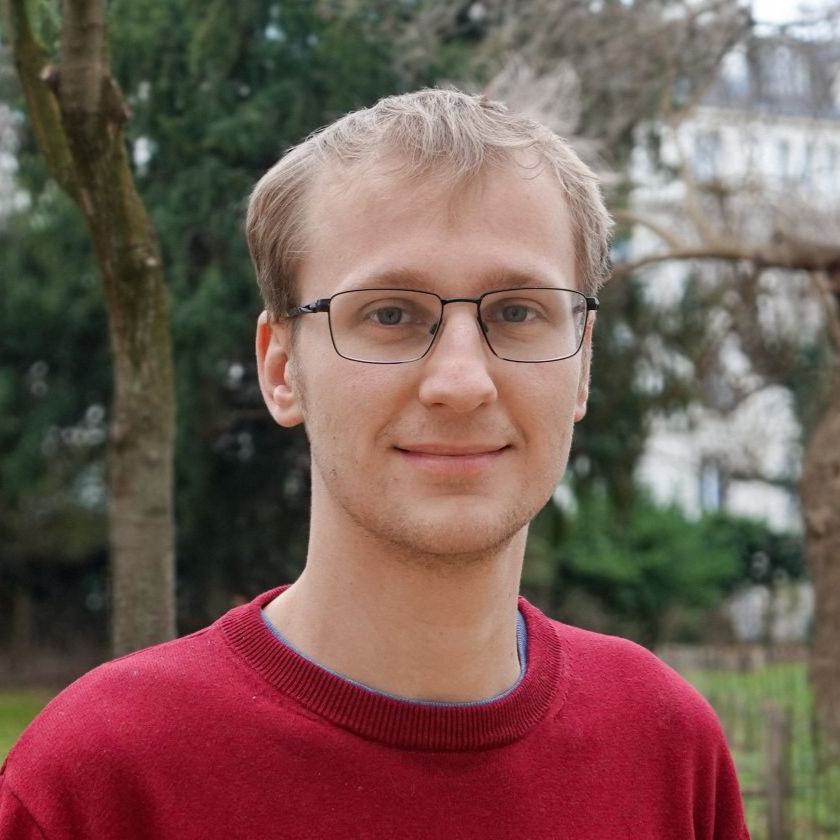

E-Mail: johannes.hertrich@ens.fr

ENS Paris, France

About me

I am a postdoc at ENS Paris and Inria Mokaplan, working with Antonin Chambolle and Julie Delon, funded by a Walter Benjamin Fellowship of the German Research Foundation (DFG). My research interests include inverse problems, optimal transport, nonlinear optimization and machine learning, with applications in mathematical image processing. My Walter Benjamin Fellowship project is titled "Variational Gradient Flows on Probability Spaces and Generative Models for Bayesian Inverse Problems in Image Processing".

Previously, I was a postdoc at University College London within the Maths4DL project in the group of Simon Arridge. I completed my PhD in 2023 at TU Berlin under the supervision of Gabriele Steidl, for which I received the Dr.-Klaus-Körper Prize 2024 of the Association of Applied Mathematics and Mechanics (GAMM) and the PhD award 2024 of the Eurasian Association on Inverse Problems (EAIP). I obtained my BSc and MSc degrees in Mathematics in 2018 and 2020 from TU Kaiserslautern (renamed RPTU in 2023). During my BSc and MSc studies, I was supported by a scholarship from the Felix Klein Center for Mathematics.

My brother Christoph is a professor of applied discrete mathematics at the University of Technology Nuremberg.

Publications

Preprints

- N. Rux, J. Hertrich and S. Neumayer (2025).

Numerical Methods for Kernel Slicing.

(arXiv Preprint#2510.11478)

[arxiv], [code]

Journal/Conference Papers

- J. Hertrich, H. S. Wong, A. Denker, S. Ducotterd, Z. Fang, M. Haltmeier, Z. Kereta, E. Kobler, O. Leong, M. S. Salehi, C.-B. Schönlieb, J. Schwab, Z. Shumaylov, J. Sulam, G. S. Wache, M. Zach, Y. Zhang, M. J. Ehrhardt and S. Neumayer (to appear).

Learning Regularization Functionals for Inverse Problems: A Comparative Study.

Accepted in: Handbook of Numerical Analysis

[arxiv], [code]

- R. Duong, V. Stein, R. Beinert, J. Hertrich and G. Steidl (2026).

Wasserstein Gradient Flows of MMD Functionals with Distance Kernel and Cauchy Problems on Quantile Functions.

ESAIM: Control, Optimisation and Calculus of Variations, vol. 32, no. 10.

[doi], [arxiv], [code]

- J. Tachella, M. Terris, S. Hurault, A. Wang, D. Chen, M.-H. Nguyen, M. Song, T. Davies, L. Davy, J. Dong, P. Escande, J. Hertrich, Z. Hu, T. Liaudat, N. Laurent, B. Levac, M. Massias, T. Moreau, T. Mordzyk, B. Monroy, S. Neumayer, J. Scanvic, F. Sarron, V. Sechaud, G. Schramm, R. Vo and P. Weiss (2025).

DeepInverse: A Python Package for Solving Imaging Inverse Problems with Deep Learning.

Journal of Open Source Software, vol. 10, no. 115.

[doi], [arxiv], [code], [documentation]

- J. Hertrich, A. Chambolle and J. Delon (2025).

On the Relation between Rectified Flows and Optimal Transport.

Conference on Neural Information Processing Systems (NeurIPS 2025).

[www], [arxiv]

- J. Hertrich and S. Neumayer (2025).

Generative Feature Training of Thin 2-Layer Networks.

Transactions on Machine Learning Research (TMLR).

[www], [arxiv], [code]

- J. Hertrich and R. Gruhlke (2025).

Importance Corrected Neural JKO Sampling.

International Conference on Machine Learning (ICML 2025).

Proceedings of Machine Learning Research, vol. 267, pp. 23083-23119.

[www], [arxiv], [code]

- A. Denker, S. Padhy, F. Vargas and J. Hertrich (2025).

Iterative Importance Fine-Tuning of Diffusion Models.

ICLR 2025 Workshop on Frontiers in Probabilistic Inference: Sampling Meets Learning.

[www], [arxiv]

- A. Denker, J. Hertrich, Z. Kereta, S. Cipiccia, E. Erin and S. Arridge (2025).

Plug-and-Play Half-Quadratic Splitting for Ptychography.

T. Bubba, R. Gaburro, S. Gazzola, K. Papafitsoros, M. Pereyra and C.B. Schönlieb (eds.)

Scale Space and Variational Methods in Computer Vision.

Lecture Notes in Computer Science, vol. 15667, pp. 269–281.

[doi], [arxiv], [code]

- J. Hertrich, T. Jahn and M. Quellmalz (2025).

Fast Summation of Radial Kernels via QMC Slicing.

International Conference on Learning Representations (ICLR 2025).

[www], [arxiv], [code], [Python library]

- J. Hertrich (2024).

Fast Kernel Summation in High Dimensions via Slicing and Fourier Transforms.

SIAM Journal on Mathematics of Data Science, vol. 6, pp. 1109-1137.

[doi], [arxiv], [code], [Python library]

- G.S. Alberti, J. Hertrich, M. Santacesaria and S. Sciutto (2024).

Manifold Learning by Mixture Models of VAEs for Inverse Problems.

Journal of Machine Learning Research (JMLR), vol. 25, no. 202.

[www], [arxiv], [code]

- P. Hagemann, J. Hertrich, M. Casfor, S. Heidenreich and G. Steidl (2024).

Mixed Noise and Posterior Estimation with Conditional DeepGEM.

Machine Learning: Science and Technology, vol. 5, no. 3.

[doi], [arxiv], [code]

- P. Hagemann, J. Hertrich, F. Altekrüger, R. Beinert, J. Chemseddine and G. Steidl (2024).

Posterior Sampling Based on Gradient Flows of the MMD with Negative Distance Kernel.

International Conference on Learning Representations (ICLR 2024).

[www], [arxiv], [code], [5-min video]

- J. Hertrich, C. Wald, F. Altekrüger and P. Hagemann (2024).

Generative Sliced MMD Flows with Riesz Kernels.

International Conference on Learning Representations (ICLR 2024).

[www], [arxiv], [code], [5-min video]

- J. Hertrich, M. Gräf, R. Beinert and G. Steidl (2024).

Wasserstein Steepest Descent Flows of Discrepancies with Riesz Kernels.

Journal of Mathematical Analysis and Applications, vol. 531, 127829.

[doi], [arxiv]

- M. Piening, F. Altekrüger, J. Hertrich, P. Hagemann, A. Walther and G. Steidl (2024).

Learning from Small Data Sets: Patch-based Regularizers in Inverse Problems for Image Reconstruction.

GAMM-Mitteilungen, vol. 47, e202470002.

[doi], [arxiv], [code]

- F. Altekrüger, J. Hertrich and G. Steidl (2023).

Neural Wasserstein Gradient Flows for Maximum Mean Discrepancies with Riesz Kernels.

International Conference on Machine Learning (ICML 2023).

Proceedings of Machine Learning Research, vol. 202, pp. 664-690.

[www], [arxiv], [code], [5-min video]

- F. Altekrüger and J. Hertrich (2023).

WPPNets and WPPFlows: The Power of Wasserstein Patch Priors for Superresolution.

SIAM Journal on Imaging Sciences, vol. 16, no. 3, pp. 1033-1067.

[doi], [arxiv], [code]

- F. Altekrüger, A. Denker, P. Hagemann, J. Hertrich, P. Maass and G. Steidl (2023).

PatchNR: Learning from Very Few Images by Patch Normalizing Flow Regularization.

Inverse Problems, vol. 39, no. 6.

[doi], [arxiv], [code]

- J. Hertrich, R. Beinert, M. Gräf and G. Steidl (2023).

Wasserstein Gradient Flows of the Discrepancy with Distance Kernel on the Line.

L. Calatroni, M. Donatelli, S. Morigi, M. Prato and M. Santacesaria (eds.)

Scale Space and Variational Methods in Computer Vision.

Lecture Notes in Computer Science, vol. 14009, pp. 431-443.

[doi], [arxiv]

- J. Hertrich (2023).

Proximal Residual Flows for Bayesian Inverse Problems.

L. Calatroni, M. Donatelli, S. Morigi, M. Prato and M. Santacesaria (eds.)

Scale Space and Variational Methods in Computer Vision.

Lecture Notes in Computer Science, vol. 14009, pp. 210-222.

[doi], [arxiv], [code]

- P. Hagemann, J. Hertrich and G. Steidl (2023).

Generalized Normalizing Flows via Markov Chains.

Elements in Non-local Data Interactions: Foundations and Applications.

Cambridge University Press.

[doi], [arxiv], [code]

- D.P.L. Nguyen, J. Hertrich, J.-F. Aujol and Y. Berthoumieu (2023).

Image super-resolution with PCA reduced generalized Gaussian mixture models in materials science.

Inverse Problems and Imaging, vol. 17, pp. 1165-1192.

[doi], [hal]

- P. Hagemann, J. Hertrich and G. Steidl (2022).

Stochastic Normalizing Flows for Inverse Problems: A Markov Chains Viewpoint.

SIAM/ASA Journal on Uncertainty Quantification, vol. 10, pp. 1162-1190.

[doi], [arxiv], [code]

- J. Hertrich, A. Houdard and C. Redenbach (2022).

Wasserstein Patch Prior for Image Superresolution.

IEEE Transactions on Computational Imaging, vol. 8, pp. 693-704.

[doi], [arxiv], [code]

- J. Hertrich and G. Steidl (2022).

Inertial Stochastic PALM and Applications in Machine Learning.

Sampling Theory, Signal Processing, and Data Analysis, vol. 20, no. 4.

[doi], [arxiv], [code]

- J. Hertrich, D.P.L. Nguyen, J.-F. Aujol, D. Bernard, Y. Berthoumieu, A. Saadaldin and G. Steidl (2022).

PCA reduced Gaussian mixture models with application in superresolution.

Inverse Problems and Imaging, vol. 16, pp. 341-366.

[doi], [arxiv], [code]

- J. Hertrich, F. Ba and G. Steidl (2022).

Sparse Mixture Models Inspired by ANOVA Decompositions.

Electronic Transactions on Numerical Analysis, vol. 55, pp. 142-168.

[doi], [arxiv], [code]

- J. Hertrich, S. Neumayer and G. Steidl (2021).

Convolutional Proximal Neural Networks and Plug-and-Play Algorithms.

Linear Algebra and its Applications, vol. 631, pp. 203-234.

[doi], [arxiv], [code]

- M. Hasannasab, J. Hertrich, F. Laus and G. Steidl (2021).

Alternatives to the EM Algorithm for ML-Estimation of Location, Scatter Matrix and Degree of Freedom of the Student-t Distribution.

Numerical Algorithms, vol. 87, pp. 77-118.

[doi], [arxiv], [code]

- T. Batard, J. Hertrich and G. Steidl (2020).

Variational models for color image correction inspired by visual perception and neuroscience.

Journal of Mathematical Imaging and Vision, vol. 62, pp. 1173-1194.

[doi], [hal]

- M. Hasannasab, J. Hertrich, S. Neumayer, G. Plonka, S. Setzer and G. Steidl (2020).

Parseval Proximal Neural Networks.

Journal of Fourier Analysis and Applications, vol. 26, no. 59.

[doi], [arxiv], [code]

- M. Bačák, J. Hertrich, S. Neumayer and G. Steidl (2020).

Minimal Lipschitz and ∞-Harmonic Extensions of Vector-Valued Functions on Finite Graphs.

Information and Inference: A Journal of the IMA, vol. 9, pp. 935–959.

[doi], [arxiv], [code]

- J. Hertrich, M. Bačák, S. Neumayer and G. Steidl (2019).

Minimal Lipschitz extensions for vector-valued functions on finite graphs.

M. Burger, J. Lellmann and J. Modersitzki (eds.)

Scale Space and Variational Methods in Computer Vision.

Lecture Notes in Computer Science, vol. 11603, pp. 183-195.

[doi], [code]

Theses

- Proximal Neural Networks and Stochastic Normalizing Flows for Inverse Problems.

PhD Thesis, 2023.

TU Berlin

[doi]

- Superresolution via Student-t Mixture Models.

Master's Thesis, 2020.

TU Kaiserslautern

- Infinity Laplacians on Scalar- and Vector-valued Functions and Optimal Lipschitz Extensions on Graphs.

Bachelor's Thesis, 2018.

TU Kaiserslautern